注意

点击这里下载完整的示例代码

07. 在 PASCAL VOC 上训练 YOLOv3¶

本教程将介绍训练 GluonCV 提供的 YOLOv3 目标检测模型的基本步骤。

具体来说,我们将展示如何通过组合 GluonCV 组件来构建最先进的 YOLOv3 模型。

提示

您可以跳过本教程的其余部分,立即下载此脚本开始训练您的 YOLOv3 模型

下载 train_yolo.py 随机形状训练需要更多 GPU 显存,但能产生更好的结果。您可以通过设置 –no-random-shape 关闭它。

示例用法

在 GPU 0 上使用 Pascal VOC 训练默认的 darknet53 模型

python train_yolo.py --gpus 0

在 GPU 0,1,2,3 上使用同步批量归一化训练 darknet53 模型

python train_yolo.py --gpus 0,1,2,3 --network darknet53 --syncbn

检查支持的参数

python train_yolo.py --help

提示

由于本教程中的许多内容与 04. 在 Pascal VOC 数据集上训练 SSD 非常相似,如果您已经熟悉,可以跳过任何部分。

数据集¶

请先阅读此 准备 PASCAL VOC 数据集 教程,以便在您的磁盘上设置 Pascal VOC 数据集。然后,我们就可以加载训练和验证图像了。

import gluoncv as gcv

from gluoncv.data import VOCDetection

# typically we use 2007+2012 trainval splits for training data

train_dataset = VOCDetection(splits=[(2007, 'trainval'), (2012, 'trainval')])

# and use 2007 test as validation data

val_dataset = VOCDetection(splits=[(2007, 'test')])

print('Training images:', len(train_dataset))

print('Validation images:', len(val_dataset))

输出

Training images: 16551

Validation images: 4952

数据转换¶

我们可以从训练数据集中读取图像-标签对

train_image, train_label = train_dataset[60]

bboxes = train_label[:, :4]

cids = train_label[:, 4:5]

print('image:', train_image.shape)

print('bboxes:', bboxes.shape, 'class ids:', cids.shape)

输出

image: (500, 334, 3)

bboxes: (6, 4) class ids: (6, 1)

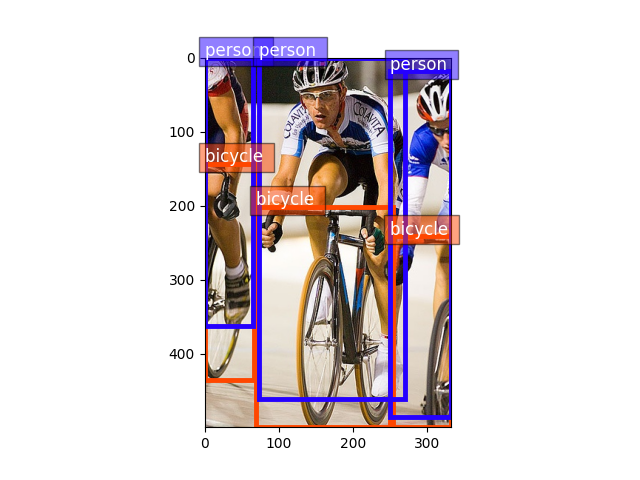

绘制图像及相应的边界框标签

from matplotlib import pyplot as plt

from gluoncv.utils import viz

ax = viz.plot_bbox(train_image.asnumpy(), bboxes, labels=cids, class_names=train_dataset.classes)

plt.show()

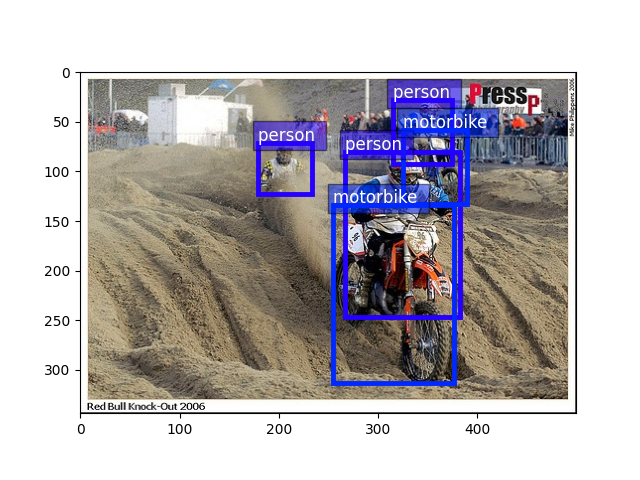

验证图像与训练图像非常相似,因为它们基本上是随机分割到不同集合中的

val_image, val_label = val_dataset[100]

bboxes = val_label[:, :4]

cids = val_label[:, 4:5]

ax = viz.plot_bbox(val_image.asnumpy(), bboxes, labels=cids, class_names=train_dataset.classes)

plt.show()

对于 YOLOv3 网络,我们应用与 SSD 示例类似的转换。

from gluoncv.data.transforms import presets

from gluoncv import utils

from mxnet import nd

width, height = 416, 416 # resize image to 416x416 after all data augmentation

train_transform = presets.yolo.YOLO3DefaultTrainTransform(width, height)

val_transform = presets.yolo.YOLO3DefaultValTransform(width, height)

utils.random.seed(123) # fix seed in this tutorial

对训练图像应用转换

train_image2, train_label2 = train_transform(train_image, train_label)

print('tensor shape:', train_image2.shape)

输出

tensor shape: (3, 416, 416)

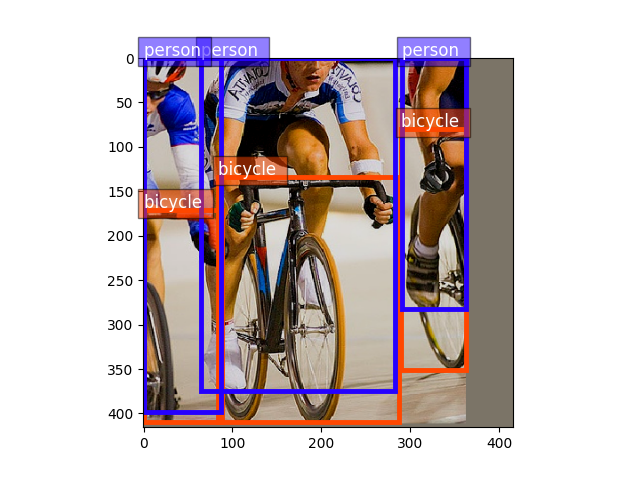

张量中的图像会失真,因为它们不再处于 (0, 255) 范围内。让我们将它们转换回来以便清晰查看。

train_image2 = train_image2.transpose((1, 2, 0)) * nd.array((0.229, 0.224, 0.225)) + nd.array((0.485, 0.456, 0.406))

train_image2 = (train_image2 * 255).clip(0, 255)

ax = viz.plot_bbox(train_image2.asnumpy(), train_label2[:, :4],

labels=train_label2[:, 4:5],

class_names=train_dataset.classes)

plt.show()

训练中使用的转换包括随机颜色失真、随机扩展/裁剪、随机翻转、调整大小和固定颜色归一化。相比之下,验证仅涉及调整大小和颜色归一化。

数据加载器¶

训练期间我们将多次遍历整个数据集。请记住,原始图像在馈送到神经网络之前必须转换为张量(mxnet 使用 BCHW 格式)。

方便的数据加载器将非常便于我们应用不同的转换并将数据聚合成小批量。

由于图像中对象的数量变化很大,我们也有变化的标签大小。因此,我们需要将这些标签填充到相同大小。为了解决这个问题,GluonCV 提供了 gluoncv.data.batchify.Pad,它可以自动处理填充。此外,gluoncv.data.batchify.Stack 用于堆叠形状一致的 NDArray。gluoncv.data.batchify.Tuple 用于处理转换函数多个输出的不同行为。

from gluoncv.data.batchify import Tuple, Stack, Pad

from mxnet.gluon.data import DataLoader

batch_size = 2 # for tutorial, we use smaller batch-size

num_workers = 0 # you can make it larger(if your CPU has more cores) to accelerate data loading

# behavior of batchify_fn: stack images, and pad labels

batchify_fn = Tuple(Stack(), Pad(pad_val=-1))

train_loader = DataLoader(train_dataset.transform(train_transform), batch_size, shuffle=True,

batchify_fn=batchify_fn, last_batch='rollover', num_workers=num_workers)

val_loader = DataLoader(val_dataset.transform(val_transform), batch_size, shuffle=False,

batchify_fn=batchify_fn, last_batch='keep', num_workers=num_workers)

for ib, batch in enumerate(train_loader):

if ib > 3:

break

print('data 0:', batch[0][0].shape, 'label 0:', batch[1][0].shape)

print('data 1:', batch[0][1].shape, 'label 1:', batch[1][1].shape)

输出

data 0: (3, 416, 416) label 0: (3, 6)

data 1: (3, 416, 416) label 1: (3, 6)

data 0: (3, 416, 416) label 0: (2, 6)

data 1: (3, 416, 416) label 1: (2, 6)

data 0: (3, 416, 416) label 0: (5, 6)

data 1: (3, 416, 416) label 1: (5, 6)

data 0: (3, 416, 416) label 0: (12, 6)

data 1: (3, 416, 416) label 1: (12, 6)

YOLOv3 网络¶

GluonCV 的 YOLOv3 实现是一个复合的 Gluon HybridBlock。在结构方面,YOLOv3 网络由基础特征提取网络、卷积过渡层、上采样层和专门设计的 YOLOv3 输出层组成。

我们强烈建议您阅读原始论文,以了解 YOLO 背后的更多思想 [YOLOv3]。

Gluon 模型库内置了一些 YOLO 网络,更多正在开发中。您只需一行简单的代码即可加载您喜欢的模型

提示

为了避免在本教程中下载模型,我们将 pretrained_base 设置为 False,实际上我们通常希望通过设置 pretrained_base=True 来加载预训练的 ImageNet 模型。

from gluoncv import model_zoo

net = model_zoo.get_model('yolo3_darknet53_voc', pretrained_base=False)

print(net)

输出

YOLOV3(

(_target_generator): YOLOV3TargetMerger(

(_dynamic_target): YOLOV3DynamicTargetGeneratorSimple(

(_batch_iou): BBoxBatchIOU(

(_pre): BBoxSplit(

)

)

)

)

(_loss): YOLOV3Loss(batch_axis=0, w=None)

(stages): HybridSequential(

(0): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(2): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(3): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(4): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(5): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(6): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(7): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(8): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(9): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(10): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(11): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(12): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(13): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(14): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

)

(1): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(2): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(3): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(4): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(5): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(6): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(7): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(8): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

)

(2): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 1024, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(2): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(3): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(4): DarknetBasicBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

)

)

(transitions): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

(yolo_blocks): HybridSequential(

(0): YOLODetectionBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(2): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(3): HybridSequential(

(0): Conv2D(None -> 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(4): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

(tip): HybridSequential(

(0): Conv2D(None -> 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

(1): YOLODetectionBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(2): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(3): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(4): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

(tip): HybridSequential(

(0): Conv2D(None -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

(2): YOLODetectionBlockV3(

(body): HybridSequential(

(0): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(1): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(2): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(3): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

(4): HybridSequential(

(0): Conv2D(None -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

(tip): HybridSequential(

(0): Conv2D(None -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=None)

(2): LeakyReLU(0.1)

)

)

)

(yolo_outputs): HybridSequential(

(0): YOLOOutputV3(

(prediction): Conv2D(None -> 75, kernel_size=(1, 1), stride=(1, 1))

)

(1): YOLOOutputV3(

(prediction): Conv2D(None -> 75, kernel_size=(1, 1), stride=(1, 1))

)

(2): YOLOOutputV3(

(prediction): Conv2D(None -> 75, kernel_size=(1, 1), stride=(1, 1))

)

)

)

YOLOv3 网络可以通过图像张量调用

YOLOv3 返回三个值,其中 cids 是类别标签,scores 是每个预测的置信度分数,bboxes 是相应边界框的绝对坐标。

训练目标¶

端到端 YOLOv3 训练涉及四种损失。惩罚错误类别/框预测的损失,并在 gluoncv.loss.YOLOV3Loss 中定义

loss = gcv.loss.YOLOV3Loss()

# which is already included in YOLOv3 network

print(net._loss)

输出

YOLOV3Loss(batch_axis=0, w=None)

为了加快训练速度,我们让 CPU 预先计算一些训练目标(类似于 SSD 示例)。当您的 CPU 强大并且可以使用 -j num_workers 来利用多核 CPU 时,这尤其有用。

如果我们将网络提供给训练转换函数,它将计算部分训练目标

from mxnet import autograd

train_transform = presets.yolo.YOLO3DefaultTrainTransform(width, height, net)

# return stacked images, center_targets, scale_targets, gradient weights, objectness_targets, class_targets

# additionally, return padded ground truth bboxes, so there are 7 components returned by dataloader

batchify_fn = Tuple(*([Stack() for _ in range(6)] + [Pad(axis=0, pad_val=-1) for _ in range(1)]))

train_loader = DataLoader(train_dataset.transform(train_transform), batch_size, shuffle=True,

batchify_fn=batchify_fn, last_batch='rollover', num_workers=num_workers)

for ib, batch in enumerate(train_loader):

if ib > 0:

break

print('data:', batch[0][0].shape)

print('label:', batch[6][0].shape)

with autograd.record():

input_order = [0, 6, 1, 2, 3, 4, 5]

obj_loss, center_loss, scale_loss, cls_loss = net(*[batch[o] for o in input_order])

# sum up the losses

# some standard gluon training steps:

# autograd.backward(sum_loss)

# trainer.step(batch_size)

输出

data: (3, 416, 416)

label: (4, 4)

这次我们可以看到数据加载器实际上为我们返回了训练目标。然后很自然地就是一个带有 Trainer 的 Gluon 训练循环,并让它更新权重。

提示

请查看完整的训练脚本以获取完整实现。