Image Classification


MXNet

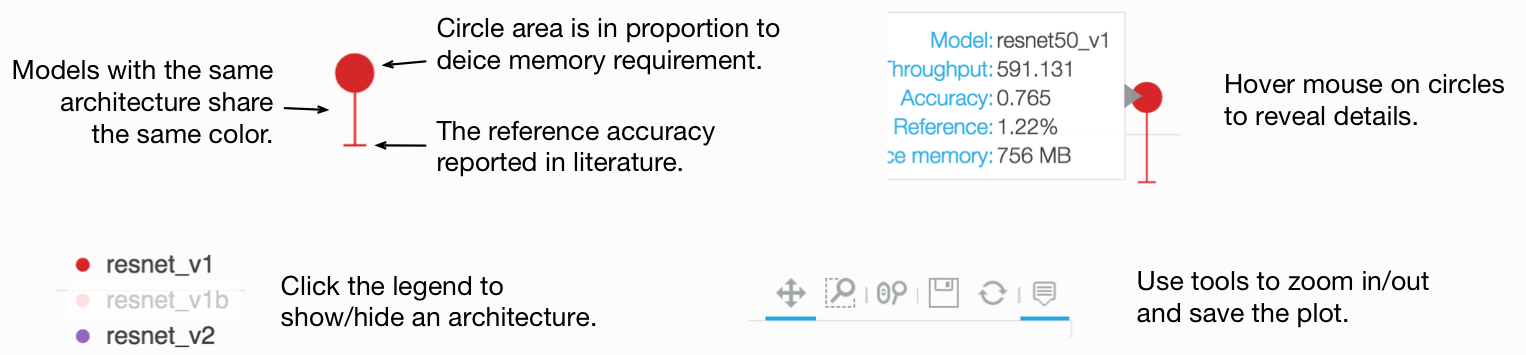

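The figure visualizes inference throughput versus validation accuracy for the ImageNet pre-trained models. Throughput was measured on a single V100 GPU with a batch size of 64.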
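As a rough illustration of what a throughput figure means, the sketch below times a callable that processes one batch and reports samples per second. It is not the benchmark harness behind the figure; the function name `measure_throughput`, the warm-up count, and the stand-in workload are all assumptions made for this example.

```python
import time

def measure_throughput(run_batch, batch_size=64, warmup=2, iters=10):
    """Return samples per second for a callable that processes one batch."""
    for _ in range(warmup):          # warm-up runs are excluded from timing
        run_batch()
    start = time.perf_counter()
    for _ in range(iters):
        run_batch()
    # Note: with an asynchronous framework, synchronize here before
    # reading the clock (for MXNet, mx.nd.waitall()).
    elapsed = time.perf_counter() - start
    return batch_size * iters / elapsed

# Stand-in workload (hypothetical); replace with a real forward pass.
def fake_forward():
    return sum(i * i for i in range(10000))

print('%.1f samples/sec' % measure_throughput(fake_forward))
```

The default batch size of 64 matches the setting stated above; everything else is a placeholder to adapt.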

How To Use Pretrained Models

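- The following example requires GluonCV>=0.4 and MXNet>=1.4.0. If necessary, follow our installation guide to install or upgrade GluonCV and MXNet.
- Prepare an image of your own or use our sample image. Save it as classification-demo.png in your working directory, or change the filename in the source code if you use a different name.
- Use a pre-trained model. A model is specified by its name.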

Let's try it out!

    import mxnet as mx
    import gluoncv

    # you can change it to your image filename
    filename = 'classification-demo.png'

    # you may modify it to switch to another model. The name is case-insensitive
    model_name = 'ResNet50_v1d'

    # download and load the pre-trained model
    net = gluoncv.model_zoo.get_model(model_name, pretrained=True)

    # load image
    img = mx.image.imread(filename)

    # apply default data preprocessing
    transformed_img = gluoncv.data.transforms.presets.imagenet.transform_eval(img)

    # run forward pass to obtain the predicted score for each class
    pred = net(transformed_img)

    # map predicted values to probability by softmax
    prob = mx.nd.softmax(pred)[0].asnumpy()

    # find the 5 class indices with the highest score
    ind = mx.nd.topk(pred, k=5)[0].astype('int').asnumpy().tolist()

    # print the class name and predicted probability
    print('The input picture is classified to be')
    for i in range(5):
        print('- [%s], with probability %.3f.' % (net.classes[ind[i]], prob[ind[i]]))

The output from our sample image is expected to be

    The input picture is classified to be
    - [Welsh springer spaniel], with probability 0.899.
    - [Irish setter], with probability 0.005.
    - [Brittany spaniel], with probability 0.003.
    - [cocker spaniel], with probability 0.002.
    - [Blenheim spaniel], with probability 0.002.

Remember, you can try different models by replacing the value of model_name. Read on for the model names and their performance in the tables below.
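The softmax and top-k steps in the example can be sketched without MXNet. The snippet below is a minimal pure-Python illustration, not the library's implementation; the helper names `softmax` and `top_k` and the four scores are made up for this example.

```python
import math

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k(probs, k=5):
    """Return the indices of the k largest probabilities, highest first."""
    return sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]

# Hypothetical scores for a 4-class problem (not real model output).
scores = [2.0, 0.5, -1.0, 3.0]
probs = softmax(scores)
for i in top_k(probs, k=2):
    print('class %d: probability %.3f' % (i, probs[i]))
```

Because softmax is monotonic, ranking the raw scores and ranking the probabilities select the same classes, which is why the example can take the top-k of the scores directly.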

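ImageNet

Hint

- The training commands use this script.
- The same model can have differently trained parameters, each identified by a hashtag. Parameters listed with a grey name can be downloaded by passing the corresponding hashtag.
  - Download the default pre-trained weights: net = get_model('ResNet50_v1d', pretrained=True)
  - Download the weights for a specific hashtag: net = get_model('ResNet50_v1d', pretrained='117a384e')
- ResNet50_v1_int8 and MobileNet1.0_int8 are quantized models calibrated on the ImageNet dataset.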
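When a model has several hashtags, one way to choose among them is to look up the accuracy listed for each. The sketch below is only an illustration: the dictionary copies a few rows from the ResNet table on this page, and the helper `best_hashtag` is a hypothetical name, not part of GluonCV.

```python
# (model name, hashtag) -> Top-1 accuracy, copied from the ResNet table on this page.
RESNET_V1D_WEIGHTS = {
    ('ResNet50_v1d', '117a384e'): 79.15,
    ('ResNet50_v1d', '00319ddc'): 78.48,
    ('ResNet101_v1d', '1b2b825f'): 80.51,
    ('ResNet101_v1d', '8659a9d6'): 79.78,
}

def best_hashtag(model_name, weights=RESNET_V1D_WEIGHTS):
    """Return the hashtag with the highest Top-1 accuracy for model_name."""
    candidates = {tag: acc for (name, tag), acc in weights.items()
                  if name.lower() == model_name.lower()}
    if not candidates:
        raise KeyError('no weights listed for %s' % model_name)
    return max(candidates, key=candidates.get)

tag = best_hashtag('ResNet50_v1d')
print(tag)
# With GluonCV installed, the tag can then be passed as in the hint:
# net = gluoncv.model_zoo.get_model('ResNet50_v1d', pretrained=tag)
```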

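ResNet

Hint

- ResNet50_v1_int8 is a quantized version of ResNet50_v1.
- ResNet_v1b modifies ResNet_v1 by setting the stride at the 3x3 layer of the bottleneck block.
- ResNet_v1c modifies ResNet_v1b by replacing the 7x7 conv layer with three 3x3 conv layers.
- ResNet_v1d modifies ResNet_v1c by adding a 2x2 avgpool layer with stride 2 on the residual path to downsample the feature map, which preserves more information.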
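To see why average pooling can preserve more information when downsampling, compare it with plain strided sampling on a tiny feature map. This is a toy sketch, assuming the alternative is a stride-2 1x1 convolution that reads only one position per 2x2 window (an assumption not stated in the hint above). Both helper names are hypothetical, and the input is assumed to have even height and width.

```python
def strided_subsample(fmap, stride=2):
    """Keep every stride-th value, as a stride-2 1x1 conv with unit weight would."""
    return [row[::stride] for row in fmap[::stride]]

def avg_pool_2x2(fmap):
    """Average each non-overlapping 2x2 window (stride 2)."""
    h, w = len(fmap), len(fmap[0])
    return [[(fmap[r][c] + fmap[r][c + 1] + fmap[r + 1][c] + fmap[r + 1][c + 1]) / 4.0
             for c in range(0, w, 2)]
            for r in range(0, h, 2)]

fmap = [[1, 2, 3, 4],
        [5, 6, 7, 8],
        [9, 10, 11, 12],
        [13, 14, 15, 16]]
print(strided_subsample(fmap))  # reads only 4 of the 16 inputs
print(avg_pool_2x2(fmap))       # every input contributes to some output
```

Both outputs are 2x2, but the strided version never looks at three quarters of the input values, while the pooled version summarizes all of them.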

| Model | Top-1 | Top-5 | Hashtag | Training Command | Training Log |
|---|---|---|---|---|---|
| ResNet18_v1 [1] | 70.93 | 89.92 | a0666292 | | |
| ResNet34_v1 [1] | 74.37 | 91.87 | 48216ba9 | | |
| ResNet50_v1 [1] | 77.36 | 93.57 | cc729d95 | | |
| ResNet50_v1_int8 [1] | 76.86 | 93.46 | cc729d95 | | |
| ResNet101_v1 [1] | 78.34 | 94.01 | d988c13d | | |
| ResNet152_v1 [1] | 79.22 | 94.64 | acfd0970 | | |
| ResNet18_v1b [1] | 70.94 | 89.83 | 2d9d980c | | |
| ResNet34_v1b [1] | 74.65 | 92.08 | 8e16b848 | | |
| ResNet50_v1b [1] | 77.67 | 93.82 | 0ecdba34 | | |
| ResNet50_v1b_gn [1] | 77.36 | 93.59 | 0ecdba34 | | |
| ResNet101_v1b [1] | 79.20 | 94.61 | a455932a | | |
| ResNet152_v1b [1] | 79.69 | 94.74 | a5a61ee1 | | |
| ResNet50_v1c [1] | 78.03 | 94.09 | 2a4e0708 | | |
| ResNet101_v1c [1] | 79.60 | 94.75 | 064858f2 | | |
| ResNet152_v1c [1] | 80.01 | 94.96 | 75babab6 | | |
| ResNet50_v1d [1] | 79.15 | 94.58 | 117a384e | | |
| ResNet50_v1d [1] | 78.48 | 94.20 | 00319ddc | | |
| ResNet101_v1d [1] | 80.51 | 95.12 | 1b2b825f | | |
| ResNet101_v1d [1] | 79.78 | 94.80 | 8659a9d6 | | |
| ResNet152_v1d [1] | 80.61 | 95.34 | cddbc86f | | |
| ResNet152_v1d [1] | 80.26 | 95.00 | cfe0220d | | |
| ResNet18_v2 [2] | 71.00 | 89.92 | a81db45f | | |
| ResNet34_v2 [2] | 74.40 | 92.08 | 9d6b80bb | | |
| ResNet50_v2 [2] | 77.11 | 93.43 | ecdde353 | | |
| ResNet101_v2 [2] | 78.53 | 94.17 | 18e93e4f | | |
| ResNet152_v2 [2] | 79.21 | 94.31 | f2695542 | | |

ResNext

| Model | Top-1 | Top-5 | Hashtag | Training Command | Training Log |
|---|---|---|---|---|---|
| ResNext50_32x4d [12] | 79.32 | 94.53 | 4ecf62e2 | | |
| ResNext101_32x4d [12] | 80.37 | 95.06 | 8654ca5d | | |
| ResNext101_64x4d_v1 [12] | 80.69 | 95.17 | 2f0d1c9d | | |
| | 79.95 | 94.93 | 7906e0e1 | | |
| | 80.91 | 95.39 | 688e2389 | | |
| | 81.01 | 95.32 | 11c50114 | | |

ResNeSt

| Model | Top-1 | Top-5 | Hashtag | Training Command | Training Log |
|---|---|---|---|---|---|
| ResNeSt14 [17] | 75.75 | 92.70 | 7e0b0cae | | |
| ResNeSt26 [17] | 78.68 | 94.38 | 36459074 | | |
| ResNeSt50 [17] | 81.04 | 95.42 | bcfefe1d | | |
| ResNeSt101 [17] | 82.83 | 96.42 | 5da943b3 | | |
| ResNeSt200 [17] | 83.86 | 96.86 | 0c5d117d | | |
| ResNeSt269 [17] | 84.53 | 96.98 | 11ae7f5d | | |

MobileNet

Hint

- MobileNet1.0_int8 is a quantized version of MobileNet1.0.

| Model | Top-1 | Top-5 | Hashtag | Training Command | Training Log |
|---|---|---|---|---|---|
| MobileNet1.0 [4] | 73.28 | 91.30 | efbb2ca3 | | |
| MobileNet1.0_int8 [4] | 72.85 | 90.99 | efbb2ca3 | | |
| MobileNet1.0 [4] | 72.93 | 91.14 | cce75496 | | |
| MobileNet0.75 [4] | 70.25 | 89.49 | 84c801e2 | | |
| MobileNet0.5 [4] | 65.20 | 86.34 | 0130d2aa | | |
| MobileNet0.25 [4] | 52.91 | 76.94 | f0046a3d | | |
| MobileNetV2_1.0 [5] | 72.04 | 90.57 | f9952bcd | | |
| MobileNetV2_0.75 [5] | 69.36 | 88.50 | b56e3d1c | | |
| MobileNetV2_0.5 [5] | 64.43 | 85.31 | 08038185 | | |
| MobileNetV2_0.25 [5] | 51.76 | 74.89 | 9b1d2cc3 | | |
| MobileNetV3_Large [15] | 75.32 | 92.30 | eaa44578 | | |
| MobileNetV3_Small [15] | 67.72 | 87.51 | 33c100a7 | | |

VGG

| Model | Top-1 | Top-5 | Hashtag | Training Command | Training Log |
|---|---|---|---|---|---|
| VGG11 [9] | 66.62 | 87.34 | dd221b16 | | |
| VGG13 [9] | 67.74 | 88.11 | 6bc5de58 | | |
| VGG16 [9] | 73.23 | 91.31 | e660d456 | | |
| VGG19 [9] | 74.11 | 91.35 | ad2f660d | | |
| VGG11_bn [9] | 68.59 | 88.72 | ee79a809 | | |
| VGG13_bn [9] | 68.84 | 88.82 | 7d97a06c | | |
| VGG16_bn [9] | 73.10 | 91.76 | 7f01cf05 | | |
| VGG19_bn [9] | 74.33 | 91.85 | f360b758 | | |

SqueezeNet

| Model | Top-1 | Top-5 | Hashtag | Training Command | Training Log |
|---|---|---|---|---|---|
| SqueezeNet1.0 [10] | 56.11 | 79.09 | 264ba497 | | |
| SqueezeNet1.1 [10] | 54.96 | 78.17 | 33ba0f93 | | |

DenseNet

| Model | Top-1 | Top-5 | Hashtag | Training Command | Training Log |
|---|---|---|---|---|---|
| DenseNet121 [7] | 74.97 | 92.25 | f27dbf2d | | |
| DenseNet161 [7] | 77.70 | 93.80 | b6c8a957 | | |
| DenseNet169 [7] | 76.17 | 93.17 | 2603f878 | | |
| DenseNet201 [7] | 77.32 | 93.62 | 1cdbc116 | | |

Pruned ResNet

| Model | Top-1 | Top-5 | Hashtag | Speedup (relative to the original ResNet) |
|---|---|---|---|---|
| resnet18_v1b_0.89 | 67.2 | 87.45 | 54f7742b | 2x |
| resnet50_v1d_0.86 | 78.02 | 93.82 | a230c33f | 1.68x |
| resnet50_v1d_0.48 | 74.66 | 92.34 | 0d3e69bb | 3.3x |
| resnet50_v1d_0.37 | 70.71 | 89.74 | 9982ae49 | 5.01x |
| resnet50_v1d_0.11 | 63.22 | 84.79 | 6a25eece | 8.78x |
| resnet101_v1d_0.76 | 79.46 | 94.69 | a872796b | 1.8x |
| resnet101_v1d_0.73 | 78.89 | 94.48 | 712fccb1 | 2.02x |
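The pruned models trade accuracy for speed, so a common question is which one is fastest while still meeting an accuracy target. The sketch below answers that from the table's own numbers; the helper `fastest_above` is a hypothetical name, and each speedup is presumably relative to that model's own unpruned baseline, so comparisons across ResNet depths are only rough.

```python
# (model, Top-1, speedup relative to the original ResNet), copied from the table above.
PRUNED = [
    ('resnet18_v1b_0.89', 67.2, 2.0),
    ('resnet50_v1d_0.86', 78.02, 1.68),
    ('resnet50_v1d_0.48', 74.66, 3.3),
    ('resnet50_v1d_0.37', 70.71, 5.01),
    ('resnet50_v1d_0.11', 63.22, 8.78),
    ('resnet101_v1d_0.76', 79.46, 1.8),
    ('resnet101_v1d_0.73', 78.89, 2.02),
]

def fastest_above(min_top1, models=PRUNED):
    """Return the entry with the largest speedup whose Top-1 is at least min_top1."""
    eligible = [m for m in models if m[1] >= min_top1]
    if not eligible:
        return None
    return max(eligible, key=lambda m: m[2])

print(fastest_above(78.0))
print(fastest_above(70.0))
```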

Others

Hint

- InceptionV3 is evaluated with an input size of 299x299.

| Model | Top-1 | Top-5 | Hashtag | Training Command | Training Log |
|---|---|---|---|---|---|
| AlexNet [6] | 54.92 | 78.03 | 44335d1f | | |
| darknet53 [3] | 78.56 | 94.43 | 2189ea49 | | |
| darknet53 [3] | 78.13 | 93.86 | 95975047 | | |
| InceptionV3 [8] | 78.77 | 94.39 | a5050dbc | | |
| GoogLeNet [16] | 72.87 | 91.17 | c7c89366 | | |
| Xception [8] | 79.56 | 94.77 | 37c1c90b | | |
| InceptionV3 [8] | 78.41 | 94.13 | e132adf2 | | |
| SENet_154 [14] | 81.26 | 95.51 | b5538ef1 | | |

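CIFAR10

The table below lists pre-trained models trained on CIFAR10.

Hint

- Our pre-trained models reproduce the results from "Mix-Up" [13]. Please check the reference for more information.
- The training commands in the table work with the following scripts:
  - For vanilla training: download train_cifar10.py
  - For mix-up training: download train_mixup_cifar10.py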

| Model | Accuracy (Vanilla / Mix-Up [13]) | Training Command | Training Log |
|---|---|---|---|
| CIFAR_ResNet20_v1 [1] | 92.1 / 92.9 | | |
| CIFAR_ResNet56_v1 [1] | 93.6 / 94.2 | | |
| CIFAR_ResNet110_v1 [1] | 93.0 / 95.2 | | |
| CIFAR_ResNet20_v2 [2] | 92.1 / 92.7 | | |
| CIFAR_ResNet56_v2 [2] | 93.7 / 94.6 | | |
| CIFAR_ResNet110_v2 [2] | 94.3 / 95.5 | | |
| CIFAR_WideResNet16_10 [11] | 95.1 / 96.7 | | |
| CIFAR_WideResNet28_10 [11] | 95.6 / 97.2 | | |
| CIFAR_WideResNet40_8 [11] | 95.9 / 97.3 | | |
| CIFAR_ResNeXt29_16x64d [12] | 96.3 / 97.3 | | |
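For readers unfamiliar with the Mix-Up numbers in the table, the core idea from Zhang et al. is to train on convex combinations of example pairs and their labels. The sketch below shows that single step in pure Python. It is not taken from train_mixup_cifar10.py; the function name `mixup`, the default alpha=0.2, and the toy inputs are assumptions for illustration.

```python
import random

def mixup(x_i, y_i, x_j, y_j, alpha=0.2, rng=random):
    """Blend two examples and their one-hot labels with a Beta(alpha, alpha) weight."""
    lam = rng.betavariate(alpha, alpha)  # mixing weight in [0, 1]
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x_i, x_j)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y_i, y_j)]
    return x, y, lam

# Toy 3-feature inputs with 2-class one-hot labels (hypothetical data).
rng = random.Random(0)
x, y, lam = mixup([1.0, 0.0, 0.5], [1.0, 0.0],
                  [0.0, 1.0, 0.5], [0.0, 1.0], rng=rng)
print(lam, x, y)
```

The mixed label stays a valid probability distribution because the two weights, lam and 1 - lam, sum to 1.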

PyTorch

Models implemented in PyTorch will be added later. Please refer to our MXNet implementation in the meantime.

References

- [1] He, Kaiming, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. “Deep residual learning for image recognition.” In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770-778. 2016.
- [2] He, Kaiming, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. “Identity mappings in deep residual networks.” In European Conference on Computer Vision, pp. 630-645. Springer, Cham, 2016.
- [3] Redmon, Joseph, and Ali Farhadi. “Yolov3: An incremental improvement.” arXiv preprint arXiv:1804.02767 (2018).
- [4] Howard, Andrew G., Menglong Zhu, Bo Chen, Dmitry Kalenichenko, Weijun Wang, Tobias Weyand, Marco Andreetto, and Hartwig Adam. “Mobilenets: Efficient convolutional neural networks for mobile vision applications.” arXiv preprint arXiv:1704.04861 (2017).
- [5] Sandler, Mark, Andrew Howard, Menglong Zhu, Andrey Zhmoginov, and Liang-Chieh Chen. “Inverted Residuals and Linear Bottlenecks: Mobile Networks for Classification, Detection and Segmentation.” arXiv preprint arXiv:1801.04381 (2018).
- [6] Krizhevsky, Alex, Ilya Sutskever, and Geoffrey E. Hinton. “Imagenet classification with deep convolutional neural networks.” In Advances in neural information processing systems, pp. 1097-1105. 2012.
- [7] Huang, Gao, Zhuang Liu, Laurens Van Der Maaten, and Kilian Q. Weinberger. “Densely Connected Convolutional Networks.” In CVPR, vol. 1, no. 2, p. 3. 2017.
- [8] Szegedy, Christian, Vincent Vanhoucke, Sergey Ioffe, Jon Shlens, and Zbigniew Wojna. “Rethinking the inception architecture for computer vision.” In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 2818-2826. 2016.
- [9] Karen Simonyan, Andrew Zisserman. “Very Deep Convolutional Networks for Large-Scale Image Recognition.” arXiv technical report arXiv:1409.1556 (2014).
- [10] Iandola, Forrest N., Song Han, Matthew W. Moskewicz, Khalid Ashraf, William J. Dally, and Kurt Keutzer. “Squeezenet: Alexnet-level accuracy with 50x fewer parameters and <0.5MB model size.” arXiv preprint arXiv:1602.07360 (2016).
- [11] Zagoruyko, Sergey, and Nikos Komodakis. “Wide residual networks.” arXiv preprint arXiv:1605.07146 (2016).
- [12] Xie, Saining, Ross Girshick, Piotr Dollár, Zhuowen Tu, and Kaiming He. “Aggregated residual transformations for deep neural networks.” In Computer Vision and Pattern Recognition (CVPR), 2017 IEEE Conference on, pp. 5987-5995. IEEE, 2017.
- [13] Zhang, Hongyi, Moustapha Cisse, Yann N. Dauphin, and David Lopez-Paz. “mixup: Beyond empirical risk minimization.” arXiv preprint arXiv:1710.09412 (2017).
- [14] Hu, Jie, Li Shen, and Gang Sun. “Squeeze-and-excitation networks.” arXiv preprint arXiv:1709.01507 (2017).
- [15] Howard, Andrew, Mark Sandler, Grace Chu, Liang-Chieh Chen, Bo Chen, Mingxing Tan, Weijun Wang et al. “Searching for mobilenetv3.” arXiv preprint arXiv:1905.02244 (2019).
- [16] Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, Andrew Rabinovich. “Going Deeper with Convolutions.” arXiv preprint arXiv:1409.4842 (2014).
- [17] Hang Zhang, Chongruo Wu, Zhongyue Zhang, Yi Zhu, Zhi Zhang, Haibin Lin, Yue Sun, Tong He, Jonas Muller, R. Manmatha, Mu Li and Alex Smola. “ResNeSt: Split-Attention Network.” arXiv preprint (2020).