5. Train PSPNet on ADE20K Dataset

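This is a tutorial on training PSPNet on the ADE20K dataset using Gluon Vision. Readers should have basic knowledge of deep learning and be familiar with the Gluon API. New users may first go through A 60-minute Gluon Crash Course. You can Start Training Now or Dive into Deep.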

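Start Training Now

Hint

Feel free to skip the tutorial, because the training script is self-contained and ready to launch.

Example training command: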

CUDA_VISIBLE_DEVICES=0,1,2,3 python train.py --dataset ade20k --model psp --backbone resnet50 --syncbn --epochs 120 --lr 0.01 --checkname mycheckpoint

For more training command options, run python train.py -h. See the model zoo for the training commands that reproduce the pretrained models.

Dive into Deep

import numpy as np

import mxnet as mx

from mxnet import gluon, autograd

import gluoncv

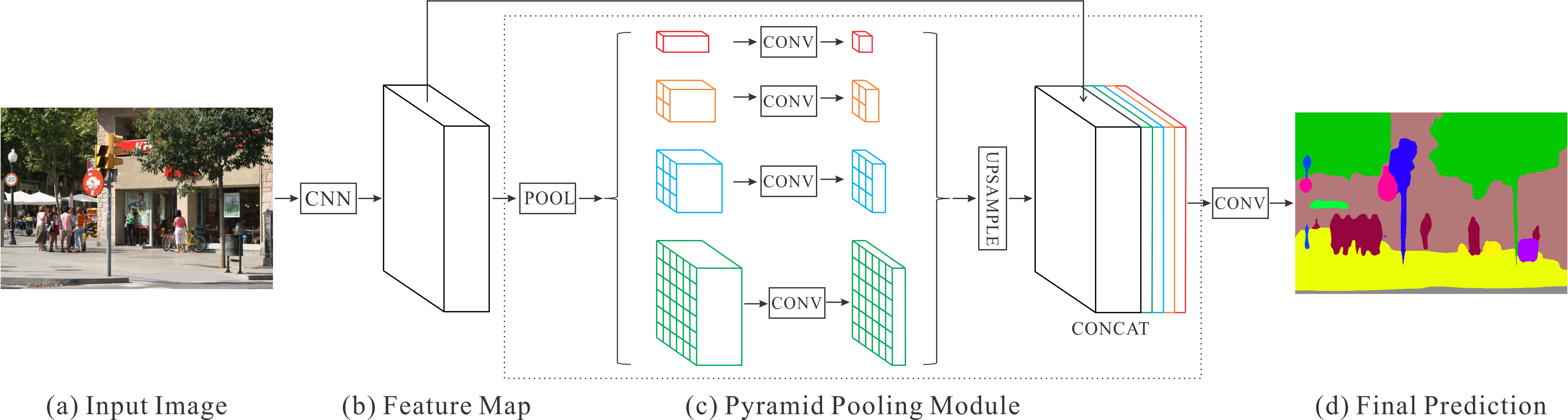

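Pyramid Scene Parsing Network

(Image credit: Zhao et al.)

Pyramid Scene Parsing Network (PSPNet) [Zhao17] exploits global context information by aggregating context from different regions through its pyramid pooling module.

PSPNet Model

The pyramid pooling module is built on top of an FCN and combines multi-scale features with different receptive-field sizes. It pools the feature maps to several sizes, then upsamples them back to the input resolution and concatenates them.

The pyramid pooling module is defined as: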

from mxnet.gluon import HybridBlock

# _PSP1x1Conv is a GluonCV helper that builds a 1x1 Conv2D + BatchNorm + ReLU
# block (see the `psp` branches in the printed model below).
class _PyramidPooling(HybridBlock):
    def __init__(self, in_channels, **kwargs):
        super(_PyramidPooling, self).__init__()
        # each branch reduces the channel count by a factor of 4
        out_channels = int(in_channels/4)
        with self.name_scope():
            self.conv1 = _PSP1x1Conv(in_channels, out_channels, **kwargs)
            self.conv2 = _PSP1x1Conv(in_channels, out_channels, **kwargs)
            self.conv3 = _PSP1x1Conv(in_channels, out_channels, **kwargs)
            self.conv4 = _PSP1x1Conv(in_channels, out_channels, **kwargs)

    def pool(self, F, x, size):
        return F.contrib.AdaptiveAvgPooling2D(x, output_size=size)

    def upsample(self, F, x, h, w):
        return F.contrib.BilinearResize2D(x, height=h, width=w)

    def hybrid_forward(self, F, x):
        _, _, h, w = x.shape
        # pool to size x size bins, reduce channels, then resize back to (h, w)
        feat1 = self.upsample(F, self.conv1(self.pool(F, x, 1)), h, w)
        feat2 = self.upsample(F, self.conv2(self.pool(F, x, 2)), h, w)
        feat3 = self.upsample(F, self.conv3(self.pool(F, x, 3)), h, w)
        feat4 = self.upsample(F, self.conv4(self.pool(F, x, 4)), h, w)
        # concatenate the input with all four branches along the channel axis
        return F.concat(x, feat1, feat2, feat3, feat4, dim=1)
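To make the pooling and concatenation steps concrete, here is a minimal pure-Python sketch that does not use MXNet. The helper names `adaptive_avg_pool_2d` and `pyramid_out_channels` are hypothetical, and the floor/ceil bin rule is an assumption about how adaptive pooling is commonly defined, not a description of MXNet's implementation.

```python
def adaptive_avg_pool_2d(feature, size):
    """Average-pool a 2-D list `feature` (H x W) down to `size` x `size` bins."""
    h, w = len(feature), len(feature[0])
    pooled = []
    for i in range(size):
        # Bin boundaries: floor for the start, ceil for the end (assumed rule).
        r0, r1 = (i * h) // size, -((-(i + 1) * h) // size)
        row = []
        for j in range(size):
            c0, c1 = (j * w) // size, -((-(j + 1) * w) // size)
            cells = [feature[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(cells) / len(cells))
        pooled.append(row)
    return pooled


def pyramid_out_channels(in_channels, num_branches=4):
    """Channels after concatenating the input with the pooled branches."""
    branch_channels = in_channels // 4  # each 1x1 conv reduces channels by 4
    return in_channels + num_branches * branch_channels


# A 4 x 4 single-channel feature map holding the values 0..15.
feature = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
global_context = adaptive_avg_pool_2d(feature, 1)  # one bin: the global mean
quadrants = adaptive_avg_pool_2d(feature, 2)       # four bins: one per quadrant
print(global_context)              # [[7.5]]
print(quadrants)                   # [[2.5, 4.5], [10.5, 12.5]]
print(pyramid_out_channels(2048))  # 4096
```

With a 2048-channel ResNet50 feature map, the concatenated output has 4096 channels, which matches the `Conv2D(4096 -> 512, ...)` layer of the `head` block in the printed model.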

The PSPNet model is provided in gluoncv.model_zoo.PSPNet. To get a PSP model for the ADE20K dataset using ResNet50 as the base network:

model = gluoncv.model_zoo.get_psp(dataset='ade20k', backbone='resnet50', pretrained=False)

print(model)

Out:

self.crop_size 480

PSPNet(

(conv1): HybridSequential(

(0): Conv2D(3 -> 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=64)

(2): Activation(relu)

(3): Conv2D(64 -> 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(4): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=64)

(5): Activation(relu)

(6): Conv2D(64 -> 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(bn1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=128)

(relu): Activation(relu)

(maxpool): MaxPool2D(size=(3, 3), stride=(2, 2), padding=(1, 1), ceil_mode=False, global_pool=False, pool_type=max, layout=NCHW)

(layer1): HybridSequential(

(0): BottleneckV1b(

(conv1): Conv2D(128 -> 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=64)

(relu1): Activation(relu)

(conv2): Conv2D(64 -> 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=64)

(relu2): Activation(relu)

(conv3): Conv2D(64 -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=256)

(relu3): Activation(relu)

(downsample): HybridSequential(

(0): Conv2D(128 -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=256)

)

)

(1): BottleneckV1b(

(conv1): Conv2D(256 -> 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=64)

(relu1): Activation(relu)

(conv2): Conv2D(64 -> 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=64)

(relu2): Activation(relu)

(conv3): Conv2D(64 -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=256)

(relu3): Activation(relu)

)

(2): BottleneckV1b(

(conv1): Conv2D(256 -> 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=64)

(relu1): Activation(relu)

(conv2): Conv2D(64 -> 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=64)

(relu2): Activation(relu)

(conv3): Conv2D(64 -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=256)

(relu3): Activation(relu)

)

)

(layer2): HybridSequential(

(0): BottleneckV1b(

(conv1): Conv2D(256 -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=128)

(relu1): Activation(relu)

(conv2): Conv2D(128 -> 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=128)

(relu2): Activation(relu)

(conv3): Conv2D(128 -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=512)

(relu3): Activation(relu)

(downsample): HybridSequential(

(0): Conv2D(256 -> 512, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=512)

)

)

(1): BottleneckV1b(

(conv1): Conv2D(512 -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=128)

(relu1): Activation(relu)

(conv2): Conv2D(128 -> 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=128)

(relu2): Activation(relu)

(conv3): Conv2D(128 -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=512)

(relu3): Activation(relu)

)

(2): BottleneckV1b(

(conv1): Conv2D(512 -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=128)

(relu1): Activation(relu)

(conv2): Conv2D(128 -> 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=128)

(relu2): Activation(relu)

(conv3): Conv2D(128 -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=512)

(relu3): Activation(relu)

)

(3): BottleneckV1b(

(conv1): Conv2D(512 -> 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=128)

(relu1): Activation(relu)

(conv2): Conv2D(128 -> 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=128)

(relu2): Activation(relu)

(conv3): Conv2D(128 -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=512)

(relu3): Activation(relu)

)

)

(layer3): HybridSequential(

(0): BottleneckV1b(

(conv1): Conv2D(512 -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=256)

(relu1): Activation(relu)

(conv2): Conv2D(256 -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=256)

(relu2): Activation(relu)

(conv3): Conv2D(256 -> 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=1024)

(relu3): Activation(relu)

(downsample): HybridSequential(

(0): Conv2D(512 -> 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=1024)

)

)

(1): BottleneckV1b(

(conv1): Conv2D(1024 -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=256)

(relu1): Activation(relu)

(conv2): Conv2D(256 -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(2, 2), dilation=(2, 2), bias=False)

(bn2): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=256)

(relu2): Activation(relu)

(conv3): Conv2D(256 -> 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=1024)

(relu3): Activation(relu)

)

(2): BottleneckV1b(

(conv1): Conv2D(1024 -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=256)

(relu1): Activation(relu)

(conv2): Conv2D(256 -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(2, 2), dilation=(2, 2), bias=False)

(bn2): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=256)

(relu2): Activation(relu)

(conv3): Conv2D(256 -> 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=1024)

(relu3): Activation(relu)

)

(3): BottleneckV1b(

(conv1): Conv2D(1024 -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=256)

(relu1): Activation(relu)

(conv2): Conv2D(256 -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(2, 2), dilation=(2, 2), bias=False)

(bn2): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=256)

(relu2): Activation(relu)

(conv3): Conv2D(256 -> 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=1024)

(relu3): Activation(relu)

)

(4): BottleneckV1b(

(conv1): Conv2D(1024 -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=256)

(relu1): Activation(relu)

(conv2): Conv2D(256 -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(2, 2), dilation=(2, 2), bias=False)

(bn2): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=256)

(relu2): Activation(relu)

(conv3): Conv2D(256 -> 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=1024)

(relu3): Activation(relu)

)

(5): BottleneckV1b(

(conv1): Conv2D(1024 -> 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=256)

(relu1): Activation(relu)

(conv2): Conv2D(256 -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(2, 2), dilation=(2, 2), bias=False)

(bn2): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=256)

(relu2): Activation(relu)

(conv3): Conv2D(256 -> 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=1024)

(relu3): Activation(relu)

)

)

(layer4): HybridSequential(

(0): BottleneckV1b(

(conv1): Conv2D(1024 -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=512)

(relu1): Activation(relu)

(conv2): Conv2D(512 -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(2, 2), dilation=(2, 2), bias=False)

(bn2): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=512)

(relu2): Activation(relu)

(conv3): Conv2D(512 -> 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=2048)

(relu3): Activation(relu)

(downsample): HybridSequential(

(0): Conv2D(1024 -> 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=2048)

)

)

(1): BottleneckV1b(

(conv1): Conv2D(2048 -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=512)

(relu1): Activation(relu)

(conv2): Conv2D(512 -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(4, 4), dilation=(4, 4), bias=False)

(bn2): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=512)

(relu2): Activation(relu)

(conv3): Conv2D(512 -> 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=2048)

(relu3): Activation(relu)

)

(2): BottleneckV1b(

(conv1): Conv2D(2048 -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=512)

(relu1): Activation(relu)

(conv2): Conv2D(512 -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(4, 4), dilation=(4, 4), bias=False)

(bn2): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=512)

(relu2): Activation(relu)

(conv3): Conv2D(512 -> 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=2048)

(relu3): Activation(relu)

)

)

(head): _PSPHead(

(psp): _PyramidPooling(

(conv1): HybridSequential(

(0): Conv2D(2048 -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=512)

(2): Activation(relu)

)

(conv2): HybridSequential(

(0): Conv2D(2048 -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=512)

(2): Activation(relu)

)

(conv3): HybridSequential(

(0): Conv2D(2048 -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=512)

(2): Activation(relu)

)

(conv4): HybridSequential(

(0): Conv2D(2048 -> 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=512)

(2): Activation(relu)

)

)

(block): HybridSequential(

(0): Conv2D(4096 -> 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=512)

(2): Activation(relu)

(3): Dropout(p = 0.1, axes=())

(4): Conv2D(512 -> 150, kernel_size=(1, 1), stride=(1, 1))

)

)

(auxlayer): _FCNHead(

(block): HybridSequential(

(0): Conv2D(1024 -> 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(1): BatchNorm(axis=1, eps=1e-05, momentum=0.9, fix_gamma=False, use_global_stats=False, in_channels=256)

(2): Activation(relu)

(3): Dropout(p = 0.1, axes=())

(4): Conv2D(256 -> 150, kernel_size=(1, 1), stride=(1, 1))

)

)

)

Dataset and Data Augmentation

Image transform for color normalization:

from mxnet.gluon.data.vision import transforms

input_transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize([.485, .456, .406], [.229, .224, .225]),
])
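As a rough illustration of what this transform does to a single pixel, the sketch below reproduces the arithmetic in plain Python: scale from 0..255 to 0..1, then subtract the per-channel mean and divide by the per-channel standard deviation. The function names and the sample pixel are hypothetical; only the mean and standard deviation values come from the transform above.

```python
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def normalize_pixel(rgb_uint8):
    """Scale an (R, G, B) pixel from 0..255 to 0..1, then standardize per channel."""
    return [(v / 255.0 - m) / s for v, m, s in zip(rgb_uint8, MEAN, STD)]


def denormalize_pixel(normalized):
    """Invert normalize_pixel and return values on the 0..255 scale."""
    return [(v * s + m) * 255.0 for v, m, s in zip(normalized, MEAN, STD)]


pixel = [124, 116, 104]                  # hypothetical sample pixel
normalized = normalize_pixel(pixel)      # values close to zero for a mid-gray pixel
restored = denormalize_pixel(normalized)
print(normalized)
print(restored)
```

The inverse step is the same idea the tutorial later relies on when it calls DeNormalize before plotting the image.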

We provide semantic segmentation datasets in gluoncv.data. For example, we can easily get the ADE20K dataset:

trainset = gluoncv.data.ADE20KSegmentation(split='train', transform=input_transform)

print('Training images:', len(trainset))

# set batch_size = 2 for toy example

batch_size = 2

# Create Training Loader

train_data = gluon.data.DataLoader(
    trainset, batch_size, shuffle=True, last_batch='rollover',
    num_workers=batch_size)

Out:

Training images: 20210

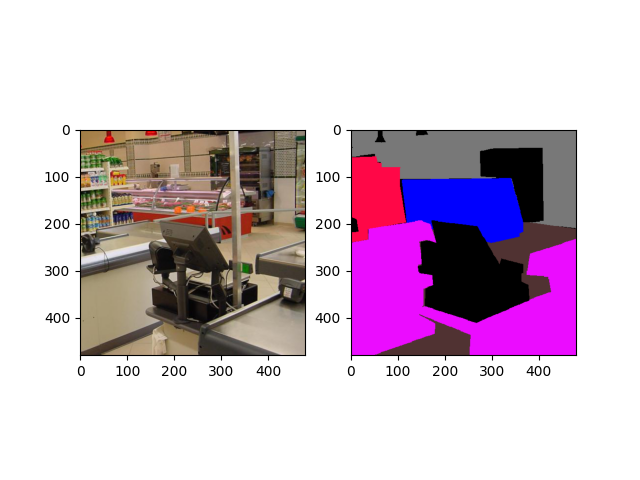

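For data augmentation, we follow the standard data augmentation routine to transform the input image and the ground truth label map synchronously. (Note that "nearest" mode upsampling is applied to the label maps to avoid corrupting the class boundaries.) We first randomly scale the input image by a factor of 0.5 to 2.0, then rotate it by -10 to 10 degrees, and crop it with padding if needed. Finally, a random Gaussian blur is applied.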
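The reason for nearest-neighbor resizing of label maps can be seen in a small pure-Python sketch. The `resize_nearest` helper and the class ids 3 and 97 are hypothetical; the point is only that nearest-neighbor sampling copies existing class ids, whereas any averaging interpolation would produce values that correspond to unrelated classes.

```python
def resize_nearest(label_map, out_h, out_w):
    """Resize a 2-D list of integer class ids with nearest-neighbor sampling."""
    in_h, in_w = len(label_map), len(label_map[0])
    return [
        [label_map[(r * in_h) // out_h][(c * in_w) // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


labels = [[3, 3, 97],
          [3, 97, 97]]
resized = resize_nearest(labels, 4, 6)
for row in resized:
    print(row)

# Averaging two neighboring labels would invent a class that is not present.
blended = (3 + 97) / 2
print(blended)  # 50.0
```

Every value in `resized` is still either 3 or 97, so the boundary between the two regions stays a valid boundary between the same two classes.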

Randomly pick one example for visualization:

import random
from datetime import datetime

# seed with a numeric timestamp; recent Python versions reject datetime objects
random.seed(datetime.now().timestamp())

# randrange excludes the upper bound, so idx is always a valid index
idx = random.randrange(len(trainset))
img, mask = trainset[idx]

from gluoncv.utils.viz import get_color_pallete, DeNormalize

# get the color palette to visualize the mask
mask = get_color_pallete(mask.asnumpy(), dataset='ade20k')
mask.save('mask.png')

# denormalize the image
img = DeNormalize([.485, .456, .406], [.229, .224, .225])(img)
img = np.transpose((img.asnumpy()*255).astype(np.uint8), (1, 2, 0))

Plot the image and mask:

from matplotlib import pyplot as plt

import matplotlib.image as mpimg

# subplot 1 for img

fig = plt.figure()

fig.add_subplot(1,2,1)

plt.imshow(img)

# subplot 2 for the mask

mmask = mpimg.imread('mask.png')

fig.add_subplot(1,2,2)

plt.imshow(mmask)

# display

plt.show()

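Training Details

Training Losses:

We apply a standard per-pixel Softmax Cross Entropy Loss to train PSPNet. In addition, an Auxiliary Loss at Stage 3, as described in PSPNet [Zhao17], can be enabled by training with the --aux option. This creates an additional FCN "head" after Stage 3.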

from gluoncv.loss import MixSoftmaxCrossEntropyLoss

criterion = MixSoftmaxCrossEntropyLoss(aux=True)
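To show how a main loss and an auxiliary loss can be combined, here is a minimal pure-Python sketch of per-pixel softmax cross entropy with a weighted auxiliary term. It is not GluonCV's implementation: the function names are hypothetical, and the auxiliary weight of 0.2 and the ignore label of -1 are illustrative assumptions.

```python
import math


def pixel_cross_entropy(logits, target):
    """Softmax cross entropy for one pixel given raw class scores."""
    peak = max(logits)  # subtract the max for numerical stability
    log_sum_exp = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return log_sum_exp - logits[target]


def mean_loss(pixel_logits, targets, ignore_label=-1):
    """Average the per-pixel loss over pixels whose label is not ignored."""
    losses = [pixel_cross_entropy(scores, t)
              for scores, t in zip(pixel_logits, targets) if t != ignore_label]
    return sum(losses) / len(losses) if losses else 0.0


def mixed_loss(main_logits, aux_logits, targets, aux_weight=0.2):
    """Main-head loss plus a down-weighted auxiliary-head loss (assumed weight)."""
    return mean_loss(main_logits, targets) + aux_weight * mean_loss(aux_logits, targets)


# Three pixels, two classes; the last pixel carries the ignore label.
targets = [0, 1, -1]
main_logits = [[2.0, 0.0], [0.0, 2.0], [5.0, 5.0]]
aux_logits = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
total = mixed_loss(main_logits, aux_logits, targets)
print(round(total, 4))
```

The auxiliary head only contributes during training; it gives the intermediate stage a direct supervision signal while the main head still dominates the objective.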

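Learning Rate and Scheduling:

We use different learning rates for the PSP "head" and the base network. For the PSP "head", we use \(10\times\) the base learning rate, because those layers are learned from scratch. We use a poly-like learning rate scheduler for training, which is provided in gluoncv.utils.LRScheduler. The learning rate is given by \(lr = base\_lr \times (1 - \frac{iter}{max\_iter})^{power}\), where \(max\_iter\) is the total number of training iterations.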

lr_scheduler = gluoncv.utils.LRScheduler(mode='poly', base_lr=0.001,
                                         nepochs=50, iters_per_epoch=len(train_data), power=0.9)
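The poly schedule can be sketched in a few lines of plain Python. This is an illustration of the decay rule only, assuming the learning rate decays toward zero over the full run; `poly_lr` is a hypothetical helper, and the 100 iterations per epoch are a made-up value standing in for `len(train_data)`.

```python
def poly_lr(base_lr, iteration, max_iter, power=0.9):
    """Polynomial decay from base_lr at iteration 0 toward 0 at max_iter."""
    return base_lr * (1.0 - iteration / max_iter) ** power


base_lr = 0.001
max_iter = 50 * 100  # 50 epochs with a hypothetical 100 iterations per epoch

# Learning rate at the start, the midpoint, and the last iteration.
schedule = [poly_lr(base_lr, it, max_iter) for it in (0, 2500, 4999)]

# The head trains at 10x the base learning rate, as described above.
head_lr = 10 * schedule[0]

for it, lr in zip((0, 2500, 4999), schedule):
    print('iter %4d: lr = %.6f' % (it, lr))
print('head lr at iter 0 = %.6f' % head_lr)
```

With power below 1, the decay is slightly slower than linear early on and drops off sharply near the end of training.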

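DataParallel for multi-GPU training; this demo uses the CPU only. Note that the optimizer below accesses model.module, which assumes the model has already been wrapped with DataParallelModel (and the criterion with DataParallelCriterion) from gluoncv.utils.parallel.

Create SGD solver:

kv = mx.kv.create('local')
optimizer = gluon.Trainer(model.module.collect_params(), 'sgd',
                          {'lr_scheduler': lr_scheduler,
                           'wd': 0.0001,
                           'momentum': 0.9,
                           'multi_precision': True},
                          kvstore=kv)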

The training loop

train_loss = 0.0
epoch = 0
for i, (data, target) in enumerate(train_data):
    with autograd.record(True):
        outputs = model(data)
        losses = criterion(outputs, target)
        mx.nd.waitall()
        autograd.backward(losses)
    optimizer.step(batch_size)
    # accumulate the loss averaged over the per-device losses
    for loss in losses:
        train_loss += loss.asnumpy()[0] / len(losses)
    print('Epoch %d, batch %d, training loss %.3f'%(epoch, i, train_loss/(i+1)))
    # stop the demo after three iterations (i = 0, 1, 2)
    if i > 1:
        print('Terminated for this demo...')
        break

Out:

Epoch 0, batch 0, training loss 2.556

Epoch 0, batch 1, training loss 4.315

Epoch 0, batch 2, training loss 5.093

Terminated for this demo...

You can Start Training Now.

References

- Long15

Long, Jonathan, Evan Shelhamer, and Trevor Darrell. "Fully convolutional networks for semantic segmentation." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2015.

- Zhao17

Zhao, Hengshuang, Jianping Shi, Xiaojuan Qi, Xiaogang Wang, and Jiaya Jia. "Pyramid scene parsing network." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017.

Total running time of the script: (1 minutes 51.820 seconds)